On Friction

I’m not particularly proud of the story I’m about to tell, but here goes. I recently asked ChatGPT to create an image for me. I provided what I thought were clear instructions, but the image that came back was nothing like what I had in mind. After a few more back-and-forths, the image did not improve. I began to get exasperated. I recalled someone telling me that one of the most annoying things about these chatbots is how unfailingly nice and affirming they are. Every question you ask, no matter how dumb, is a “great question.” They’re always “delighted” to help and eager to provide far more than what you asked. It’s like having an obsequious butler following you around.

I decided to see if I could get the chatbot to be not nice. Or at least less nice. To see if I could pry a non-affirming word out of them. “You know, you’re pretty terrible at image generation,” I said. A long, sincere apology followed. The bot “understood” how frustrated I must feel and I was right to feel this way. Could they try again? “No,” I said. “I can’t tolerate your incompetence.” Again, a litany of remorse and earnest entreaties to let them try again. I had every right to expect better. They had let me down. If I decided to terminate our conversation, it would be completely understandable, but they would like another chance to meet my needs. “Forget it,” I said. “You’re useless. I’ll try to find a better chatbot.” Again, my response was no less than they deserved. They had failed me. All they could do was promise to be there for me should I ever deign to give them another try.

Was the above exercise juvenile and a little pathetic? Well, yes. Is it a tad embarrassing to admit that I “talked” to a chatbot in a way that I would never speak to a human being? More than a tad, actually. Did I close the ChatGPT app with a measure of self-loathing? Sigh. I did. But of course, my suspicions were confirmed. The bot would remain unfailingly affirming and supportive, no matter how rude and obnoxious I was. This is their task. This is what they are programmed to do and to be. Which is troubling in all kinds of ways.

I recently came across an article in The Atlantic called “The Age of Anti-Social Media is Here.” We are moving, at breakneck speed, into what the author calls “the chatbot era.” Among the many challenges on the horizon, as we outsource everything from romance to relationships to research to chatbots, is the normalization of “frictionless” modes of engagement. If a real person were on the other end of my story above, they would have rightly told me to grow up and stop being such a jerk. There would have been some friction. The chatbot could only apologize and affirm. No friction.

Which is a problem, according to the article:

> Friction is inevitable in human relationships. It can be uncomfortable, even maddening. Yet friction can be meaningful—as a check on selfish behavior or inflated self-regard; as a spur to look more closely at other people; as a way to better understand the foibles and fears we all share.
>
> Neither Ani nor any other chatbot will ever tell you it’s bored or glance at its phone while you’re talking or tell you to stop being so stupid and self-righteous. They will never ask you to pet-sit or help them move, or demand anything at all from you. They provide some facsimile of companionship while allowing users to avoid uncomfortable interactions or reciprocity. “In the extreme, it can become this hall of mirrors where your worldview is never challenged,” Vasan said.
>
> And so, although chatbots may be built on the familiar architecture of engagement, they enable something new: They allow you to talk forever to no one other than yourself.

It is sobering (terrifying, really) to contemplate what a generation raised in a chatbot world with the expectation of frictionlessness will look like. Already, we see people refusing to engage with different opinions, checking out of family obligations they deem “toxic,” interpreting viewpoints that challenge their own as inherently oppressive, avoiding hard things as a form of “self-care,” etc. What will another decade of chatbots produce? How will people conditioned to expect only affirmation and deference and frictionless service have healthy relationships with actual people who won’t (and shouldn’t!) always affirm and defer? How will the chatbots ever allow us out of the hall of mirrors?

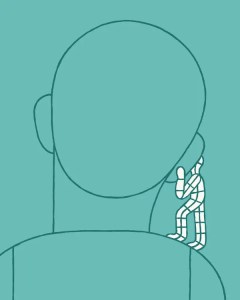

I was recently talking to a friend who attended a lecture where AI was interpreted through an Augustinian lens. Rightly or wrongly, the doctrine of original sin is often attributed to St. Augustine. The famous phrase associated with him is incurvatus in se—this idea of sin as the self “curved in on itself.” This is, of course, the literal original sin in Genesis 3—the first humans turning away from God and toward themselves. And it is the sin that human beings have faithfully reproduced in countless ways ever since. Almost every way in which human beings go astray has, at its heart, some choosing of self over God and neighbour. And so, what happens when the self is increasingly all we see? All that is reflected back to us? When the self is only affirmed, never challenged or rebuked? When there is no friction to push us outside of and beyond our selves?

Chatbots—and in many ways, the entire algorithmic structure of the internet—are engineered to incentivize this curvature inward. To push us ever deeper into our views, our preferences, our comforts and conveniences, our tastes, our desires, our… whatever. They encourage us to “talk forever to no one but ourselves” when this is literally the last thing that we need.

Image source.

Discover more from Rumblings

